Only weeks after Figure.ai announced ending its collaboration deal with OpenAI, the Silicon Valley startup has announced Helix – a commercial-ready, AI "hive-mind" humanoid robot that can do almost anything you tell it to.

Figure has made headlines in the past with its Figure 01 humanoid robot. The company is now on version 02 of its premier robot, and it has received more than just a few design changes: it's been given an entirely new AI brain called Helix VLA.

It's not just any ordinary AI either. Helix is the very first of its kind to be put into a humanoid robot. It's a generalist Vision-Language-Action model, and the keyword is "generalist." It can see the world around it, understand natural language, interact with the real world, and it can learn anything.

Unlike most AI models, which can require thousands of hours of training or hours of PhD-level manual programming for a single new behavior, Helix can combine its ability to understand semantic knowledge with its vision-language model (VLM) and translate that into actions in meat-space.

"Pick up the cassette tape from the pile of stuff over there." What if Helix had never actually seen a cassette tape with its own eyes before (granted, they are pretty rare these days)? Combining the general knowledge that large language models (LLM) like ChatGPT have with Figure's own VLM, Figure can identify and pick out the cassette. It's unknown whether it'll appreciate Michael Jackson's Thriller as much as we did though.

My team has been working for a year trying to solve intelligence for the home

— Corey Lynch (@coreylynch) February 20, 2025

Meet Helix 🧬: the first Humanoid Vision-Language-Action model

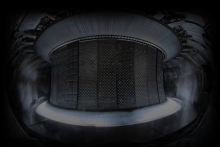

This is one Helix neural network running on 2 Figure robots at once

They've never seen any of these items before pic.twitter.com/Y6IbXMEvph

It gets better. Helix has the ability to span two robots simultaneously and work collaboratively with both. And I don't mean simply two bots digging through a pile of stuff to find Michael Bolton's greatest hits. Each robot has two GPUs built inside to handle high-level latent planning at 7-9 Hz (System 2) and low-level control at 200 Hz (System 1), meaning System 2 can really think about something while System 1 can act on whatever S2 has already created a plan of action for.

Running at 200 Hz means S1 can take quick physical action, since S2 has already planned its actions for it.

7-9 Hz means 7-9 times per second, which is no slouch, but it leaves S2 enough time to really deep-think. After all, the human expressions have always been "two heads are better than one" and "let's get another set of eyes on it." Except here, Helix is a single AI brain controlling two bots simultaneously.
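To make the two rates concrete, here is a minimal Python sketch of a fast loop consuming the output of a slow one. It is only an illustration of the timing described above, not Figure's implementation: the 8 Hz planning rate is an assumed midpoint of the quoted 7-9 Hz, and the names `simulate`, `LoopStats`, and `latent_plan` are hypothetical.

```python
from dataclasses import dataclass

S1_HZ = 200  # low-level control rate quoted in the article
S2_HZ = 8    # ASSUMPTION: midpoint of the quoted 7-9 Hz planning rate


@dataclass
class LoopStats:
    s1_steps: int = 0    # fast control steps taken
    s2_updates: int = 0  # slow plan refreshes


def simulate(duration_s: float = 1.0) -> LoopStats:
    """Step a simulated clock at the S1 rate, refreshing the plan at the S2 rate."""
    stats = LoopStats()
    steps_per_plan = S1_HZ // S2_HZ  # 25 control steps per plan refresh
    latent_plan = None
    for tick in range(int(duration_s * S1_HZ)):
        if tick % steps_per_plan == 0:
            # Stand-in for S2 producing a fresh high-level plan.
            latent_plan = f"plan-{stats.s2_updates}"
            stats.s2_updates += 1
        # S1 always acts on the most recent plan, even if it is a few ticks old.
        assert latent_plan is not None
        stats.s1_steps += 1
    return stats


if __name__ == "__main__":
    print(simulate(1.0))
```

Under these assumptions, one simulated second contains 200 control steps but only 8 plan refreshes, so each plan is reused for 25 consecutive control steps.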

Figure and OpenAI had been collaborating for about a year before Figure.ai founder Brett Adcock decided to pull the plug with a post on X: "Figure made a major breakthrough on fully end-to-end robot AI, built entirely in-house. We're excited to show you in the next 30 days something no one has ever seen on a humanoid." It's been 16 days since his February 4th post ... over-achiever?

Today, I made the decision to leave our Collaboration Agreement with OpenAI

— Brett Adcock (@adcock_brett) February 4, 2025

Figure made a major breakthrough on fully end-to-end robot AI, built entirely in-house

We're excited to show you in the next 30 days something no one has ever seen on a humanoid

Over-achiever indeed. This doesn't feel like just another small step in robotics. This feels more like a giant leap. AI now has a body and can do stuff in the real world.

Figure 01 robots have already demoed the ability to perform simple, repeatable tasks in the BMW Manufacturing Co factory in Spartanburg, SC. The Figure 02 robots represent an entirely new generation of capability. And they're commercial-ready, right out of the box, batteries included.

Unlike previous iterations, Helix uses a single set of neural network weights to learn; think "hive-mind." Once a single bot has learned a task, they all know how to do it, which bodes well for integrating the bots into homes.
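As a toy illustration of the "hive-mind" idea as described here, the Python sketch below has two robot objects hold a reference to the same policy object, so anything one of them learns is immediately visible to the other. Everything in it (`SharedPolicy`, `Robot`, `learn`, `can_do`) is hypothetical and chosen for illustration; it says nothing about how Figure actually stores or distributes Helix's weights.

```python
class SharedPolicy:
    """Stand-in for one set of network weights (hypothetical, not Figure's API)."""

    def __init__(self) -> None:
        self.skills: set[str] = set()

    def learn(self, skill: str) -> None:
        # In this toy model, "learning" just records the skill name.
        self.skills.add(skill)


class Robot:
    def __init__(self, name: str, policy: SharedPolicy) -> None:
        self.name = name
        self.policy = policy  # a reference to the shared object, not a copy

    def can_do(self, skill: str) -> bool:
        return skill in self.policy.skills


policy = SharedPolicy()
left, right = Robot("left", policy), Robot("right", policy)

# One robot picks up a skill through the shared policy...
left.policy.learn("put away groceries")

# ...and the other can use it without any separate training step.
print(right.can_do("put away groceries"))
```

The design point is simply that there is one policy and many bodies: no per-robot copy exists that could fall out of date.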

When you think about it, compared to a well-organized and controlled factory setting, a household is actually quite chaotic and complicated. Dirty laundry on the floor next to the hamper (you know who you are), your kids' foot-murdering Legos strewn about, cleaning supplies under the kitchen sink, fine china in the curio cabinet (what's that!?). The list goes on.

Figure reckons that the 02 can pick up nearly any small household object even if it's never seen it before. The Figure 02 robot has 35 degrees of freedom, including human-like wrists, hands, and fingers. Pairing its generalist knowledge with its vision model allows it to understand abstract concepts. "Pick up the desert item," in a demo, led Figure to pick up a toy cactus that it had never seen before from a pile of random objects on a table in front of it.

This is all absolutely jaw-dropping stuff. The Figure 02 humanoid robot is the closest thing to I, Robot we've seen to date. This marks the beginning of something far more than just "smart machines." Helix bridges the gap between something we control, or at least try to control, on our screens and real-world autonomy, real-world physical actions ... and real-world consequences. It's as terrifying as it is mesmerizing.

Privacy? Not anymore.

I can't help but wonder ... who controls all this data? Engineers at Figure? Is it hackable (does a bear sleep in the woods?) and is some teenager living in mom's basement going to start sending ransomware out to every owner? Are trade secrets going to be revealed from factory worker-bots? Corporate sabotage is a real thing, and I really want to know what the supposed 23 flavors found in Dr Pepper are ...

The whole hive-mind concept means that on a server somewhere, there is a complete, highly detailed walk-through of your house. Figure knows your entire family, what's in your sock drawer and your nightstand. It knows the exact dollar amount of cash stuffed in your mattress. And if you're not careful, it might even know what you look like in your birthday suit.

Source: Figure.ai